Designing for delegation

How agentic digital services could transform interactions with government

Agentic digital services represent a new interaction paradigm that current design patterns are not well-suited to accommodate. Government agencies should act now to prepare for delegation-based service interactions, not by betting on specific AI technologies, which are changing rapidly, but by developing design patterns and institutional capabilities that any delegation system will ultimately require.

The AI agents are definitely maybe coming

The AI agent landscape is awash with ambitious projections and speculative promises. Gartner has placed AI agents at the “Peak of Inflated Expectations” in its 2025 Hype Cycle for Artificial Intelligence, while simultaneously predicting that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

Much of the available data on agents tells an unclear story. While nearly 80% of organizations report using AI agents to some extent, with 82% planning integration within 1-3 years, many vendors are contributing to the hype by engaging in what is referred to as agent washing – the rebranding of existing products, such as AI assistants, robotic process automation (RPA) and chatbots, as “agents” even though they lack substantial agentic capabilities. Investment in agents is surging: AI agent start-ups raised a reported $3.8 billion in 2024, nearly tripling investments from the previous year, but demonstrating value from AI has still proven to be a challenge for some organizations.

At the same time, AI models are demonstrating increasingly sophisticated reasoning capabilities that enable them to complete complex, multi-step tasks with greater reliability. These improvements suggest that the technical foundation for fully agentic services may be maturing even while the market remains crowded with inflated claims.

This uncertainty makes it tempting for government agencies to adopt a wait-and-see approach, but that could be a mistake. Government agencies have historically lagged behind the private sector in adopting new technologies, from e-commerce capabilities in the early 2000s to broadly accessible digital services in the 2010s. This pattern of reactive adoption means that before governments can implement new technologies, the public already has established expectations from their experiences with private sector services. There’s a danger that agencies could repeat this mistake with agentic services, leaving them scrambling to catch up once people expect delegation-based interactions as a standard service option.

The potential to reduce administrative burden

There is an additional factor that requires governments to pay close attention to the development of AI agents – their very real potential to reduce administrative burden. Administrative burden is a concept used to describe the friction that people face when accessing government services, and it represents one of the most compelling use cases for agentic technology should it mature as promised.

Government processes are often complex. People seeking services must navigate multi-step applications, understand obscure bureaucratic requirements, track deadlines across multiple agencies, and respond to requests for additional documentation. This complexity systematically disadvantages those with limited time, education, digital literacy, or skill in navigating bureaucracy. It is also why some people turn to real-world agents (sometimes called “expeditors” or “facilitators”) to interact with a government agency and complete a complex task on their behalf.

Consider someone applying for disability benefits who needs to gather documentation from multiple healthcare providers, understand which government forms apply to their situation, coordinate information between different agencies, and respond to follow-up requests within specific timeframes. An autonomous agent could potentially handle much of this coordination, if the technology develops as advocates suggest it could.

The potential improvement in the experience an individual has with their government could be substantial. When procedural complexity serves as a gatekeeper, government services become less accessible to those who may need them most. Agentic services could, if implemented thoughtfully, democratize access by removing these procedural barriers without compromising program integrity.

Why existing design patterns may fall short

Many government agencies are starting to experiment with AI solutions, but these approaches mostly treat AI tools as simply another channel through which the public can interact with a government agency, similar to web or mobile. If agents become an alternative that people seek out, or that agencies adopt because of their potential to reduce administrative burden, current design approaches will not be adequate. Much of government digital service design assumes individual-controlled, step-by-step interactions. Patterns like progress bars, form validation, confirmation pages, and status updates all presuppose that an individual is actively engaged and making decisions, typically through a web browser or mobile app.

The design community has started to think deeply about how AI will change a user’s experience when navigating digital services. But true agentic services represent a fundamentally different interaction model. Instead of people navigating government processes themselves, they will delegate tasks to autonomous agents that can act on their behalf, just as some people do today with real-world permit expeditors. An AI agent helping with business license renewal might encounter unexpected document requirements, discover related permits that are also required, or find fee discrepancies that need to be resolved, all while the person on whose behalf it is working is doing something else.

This autonomous capability will transform the public-government relationship from self-service to delegation. People will need to negotiate boundaries for autonomous action before the interaction begins. They will need transparency about actions being taken on their behalf while they are offline. They will require controls for things like pausing, redirecting, or escalating agent actions when needed.

This new delegation model will create design challenges that don’t exist in traditional digital services. Unlike human representatives who can ask clarifying questions in real-time, autonomous agents will need to operate within pre-defined parameters when people aren’t available to provide guidance. This will require entirely new patterns for helping the public thoughtfully establish those parameters and maintain appropriate oversight of agents.

Setting the foundation for the delegation paradigm

Even with the current uncertainty about agent technology, government agencies should consider developing design patterns for delegation-based interactions. As people become increasingly comfortable with delegation in their personal and professional lives – from virtual assistants managing calendars to automated financial services handling transactions – they will naturally expect similar capabilities from government services. Any system that performs actions for individuals rather than requiring their step-by-step engagement creates delegation relationships that need thoughtful design patterns. Whether powered by sophisticated AI agents, traditional automation, or hybrid human-AI teams, these services will still require people to establish boundaries, understand what actions are being taken on their behalf, and maintain oversight of the process.

Four broad categories of design patterns will become essential for this new service delegation paradigm.

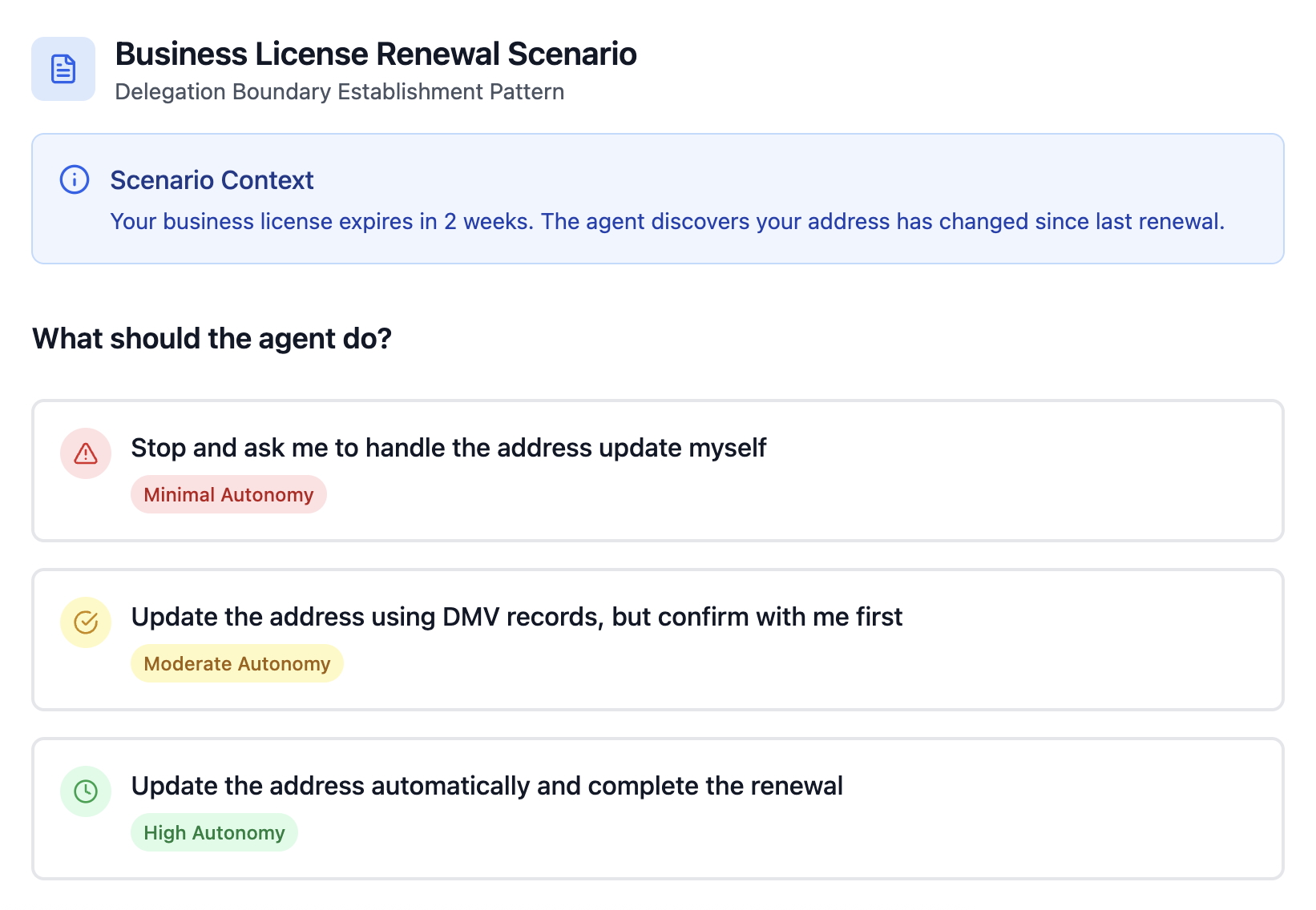

Delegation Boundary Establishment Patterns: These patterns will help people confidently establish appropriate boundaries for agent autonomy. Rather than asking people to configure abstract preferences, these patterns can use scenario-based questions and realistic government service examples to help people understand autonomy implications.

Progressive delegation setup, contextual autonomy scoping, and delegation rehearsal can help individuals make informed decisions about how much independence to grant their agents, critical for building the trust necessary for delegation relationships.

Instead of asking users to configure abstract “autonomy levels,” the example shown here presents a realistic scenario that helps a user understand the practical implications of different delegation boundaries. The user’s choice can be used to automatically configure underlying preference settings that can apply across government services.
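As a rough illustration of how a scenario answer could drive underlying preference settings, here is a minimal Python sketch. Everything in it is hypothetical: the scenario wording, the `ScenarioChoice` options, the `DelegationPreferences` fields, and the dollar thresholds are assumptions made for this example, not settings from any real government system.

```python
from dataclasses import dataclass
from enum import Enum


class ScenarioChoice(Enum):
    """Hypothetical answers to one scenario question, for example:
    'Your agent finds an unexpected $25 fee during a license renewal.
    What should it do?'"""
    STOP_AND_ASK = "stop_and_ask"
    PAY_IF_SMALL = "pay_if_small"
    HANDLE_IT = "handle_it"


@dataclass(frozen=True)
class DelegationPreferences:
    """Illustrative settings derived from a scenario answer (assumed fields)."""
    max_unapproved_fee_usd: float
    may_submit_documents: bool
    notify_before_acting: bool


# Assumed mapping from each answer to concrete settings; thresholds are made up.
PREFERENCES_BY_CHOICE = {
    ScenarioChoice.STOP_AND_ASK: DelegationPreferences(0.0, False, True),
    ScenarioChoice.PAY_IF_SMALL: DelegationPreferences(50.0, True, True),
    ScenarioChoice.HANDLE_IT: DelegationPreferences(250.0, True, False),
}


def preferences_for(choice: ScenarioChoice) -> DelegationPreferences:
    """Translate the person's scenario answer into preference settings."""
    return PREFERENCES_BY_CHOICE[choice]


def may_pay_fee(prefs: DelegationPreferences, fee_usd: float) -> bool:
    """Check whether the agent may pay a fee without asking first."""
    return fee_usd <= prefs.max_unapproved_fee_usd
```

The point of the sketch is that the person answers a concrete question once, and the resulting settings can then be checked by any service the agent touches.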

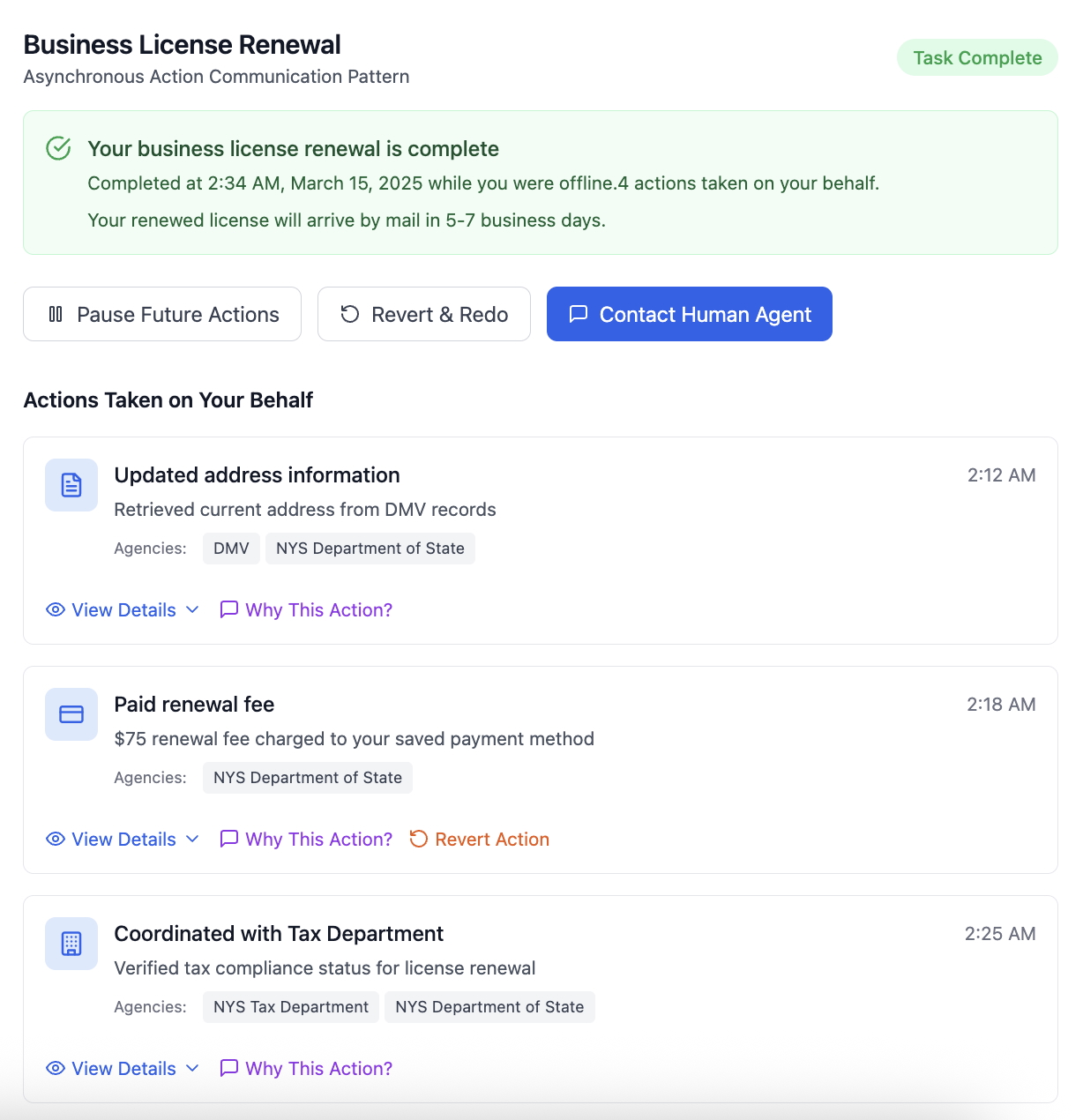

Asynchronous Action Communication Patterns: These patterns will address the fundamental challenge of autonomous action – people need transparency about what happened on their behalf without being overwhelmed by details.

Proactive transparency through standardized agent activity summaries, required notification patterns for different action types, and agent reasoning disclosure can help the public understand autonomous actions. Recoverable autonomy patterns like a universal “pause agent” functionality and standardized “rewind and redirect” options could ensure people can regain control when needed.

The example shown here provides individuals with clear visibility into autonomous actions taken on their behalf while they are out of the loop. Key elements include a chronological action timeline, agency involvement disclosure, reasoning transparency on-demand, and recoverable autonomy controls for actions that can be reversed or modified.
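One way to picture the data behind such an activity summary is sketched below in Python. This is a sketch under stated assumptions, not a reference design: the `AgentAction` and `AgentActivityLog` names, their fields, and the pause behavior are all invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class AgentAction:
    """One action the agent took on a person's behalf (assumed fields)."""
    timestamp: datetime
    agency: str
    summary: str
    reasoning: str
    reversible: bool


@dataclass
class AgentActivityLog:
    """Hypothetical log backing an activity summary and a pause control."""
    paused: bool = False
    actions: List[AgentAction] = field(default_factory=list)

    def record(self, action: AgentAction) -> None:
        # A paused agent must not take (or record) new actions.
        if self.paused:
            raise RuntimeError("agent is paused; no new actions may be taken")
        self.actions.append(action)

    def pause(self) -> None:
        self.paused = True

    def timeline(self) -> List[AgentAction]:
        """Actions in chronological order."""
        return sorted(self.actions, key=lambda a: a.timestamp)

    def reversible_actions(self) -> List[AgentAction]:
        """Actions the person could still undo or modify."""
        return [a for a in self.timeline() if a.reversible]

    def summary_lines(self, include_reasoning: bool = False) -> List[str]:
        """Short summary lines; reasoning is shown only on demand."""
        lines = []
        for a in self.timeline():
            line = f"{a.timestamp:%Y-%m-%d %H:%M} [{a.agency}] {a.summary}"
            if include_reasoning:
                line += f" (why: {a.reasoning})"
            lines.append(line)
        return lines
```

Keeping reasoning behind an `include_reasoning` flag mirrors the idea of transparency on demand: the default summary stays short, and the detail is available when someone asks for it.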

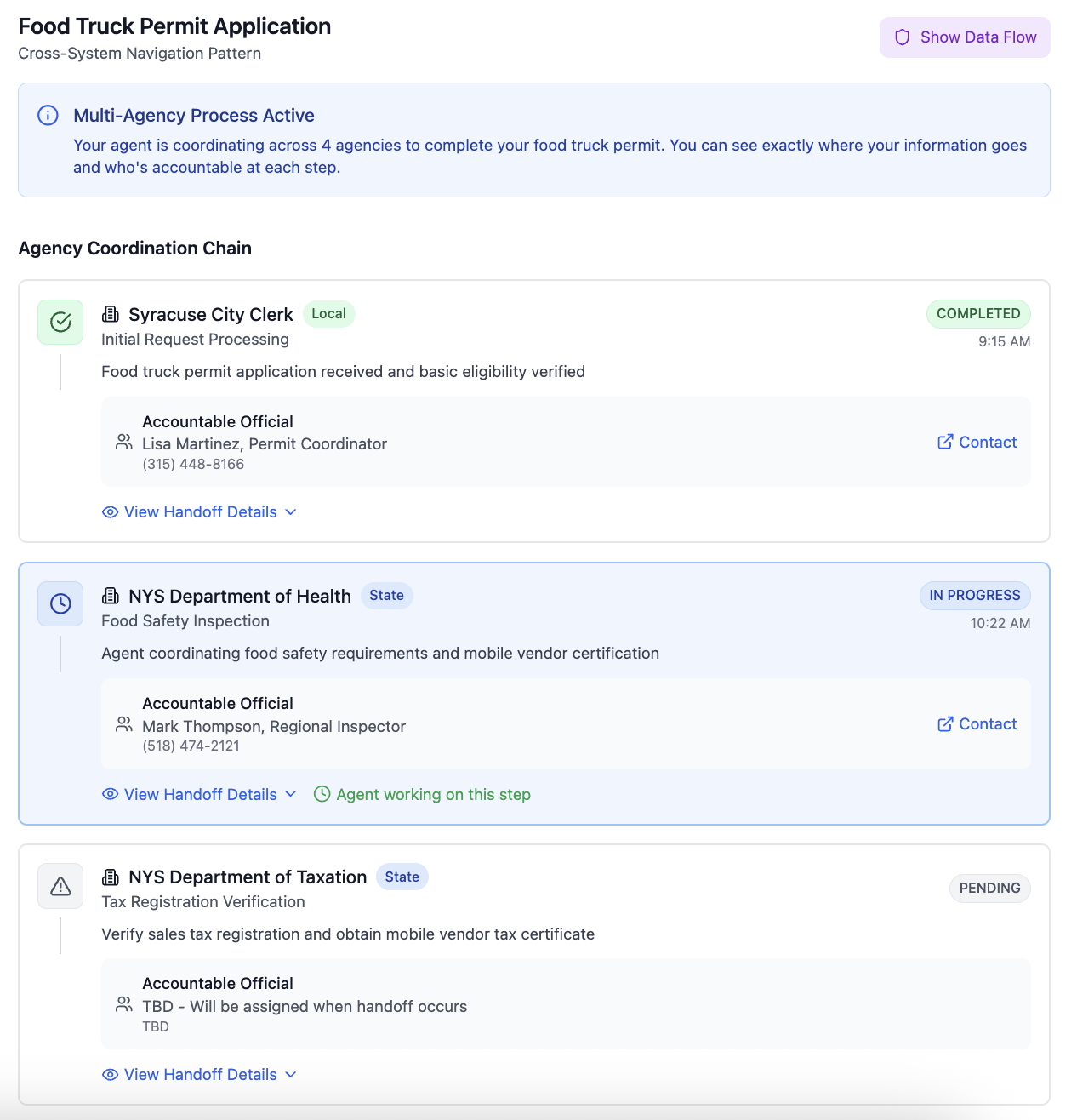

Cross-System Navigation Patterns: These patterns will reflect that government services can sometimes require coordination across multiple agencies and different levels of government.

Interagency handoff transparency, standardized delegation chains, and multi-level government coordination patterns will ensure people maintain visibility into where their information is going and who remains accountable for outcomes when agents work across government boundaries – essential for maintaining public trust in cross-agency coordination.

The example shown here provides visibility when agents coordinate across multiple government agencies and even different levels of government. Key elements include delegation chain visualization, accountability tracking at each step, data sharing transparency, handoff reasoning disclosure, and centralized controls for multi-agency processes. People can see exactly who has their information, why each agency is involved, and who they can contact at each step.
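A delegation chain of this kind could be represented as an ordered list of handoffs, as in the hedged Python sketch below. The `Handoff` record, its fields, and the two helper functions are hypothetical names chosen for this example; a real cross-agency system would need far more than this.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Handoff:
    """One step in a hypothetical delegation chain between agencies."""
    from_agency: str
    to_agency: str
    reason: str
    data_shared: Tuple[str, ...]
    accountable_contact: str


def agencies_holding(chain: List[Handoff], data_item: str) -> List[str]:
    """Return, in handoff order, each agency that received the data item."""
    holders: List[str] = []
    for handoff in chain:
        if data_item in handoff.data_shared and handoff.to_agency not in holders:
            holders.append(handoff.to_agency)
    return holders


def contact_for(chain: List[Handoff], agency: str) -> Optional[str]:
    """Return the accountable contact for the first handoff to an agency."""
    for handoff in chain:
        if handoff.to_agency == agency:
            return handoff.accountable_contact
    return None
```

With a structure like this, the questions the pattern cares about (who has a given piece of information, why an agency is involved, and whom to contact) become simple lookups over the chain.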

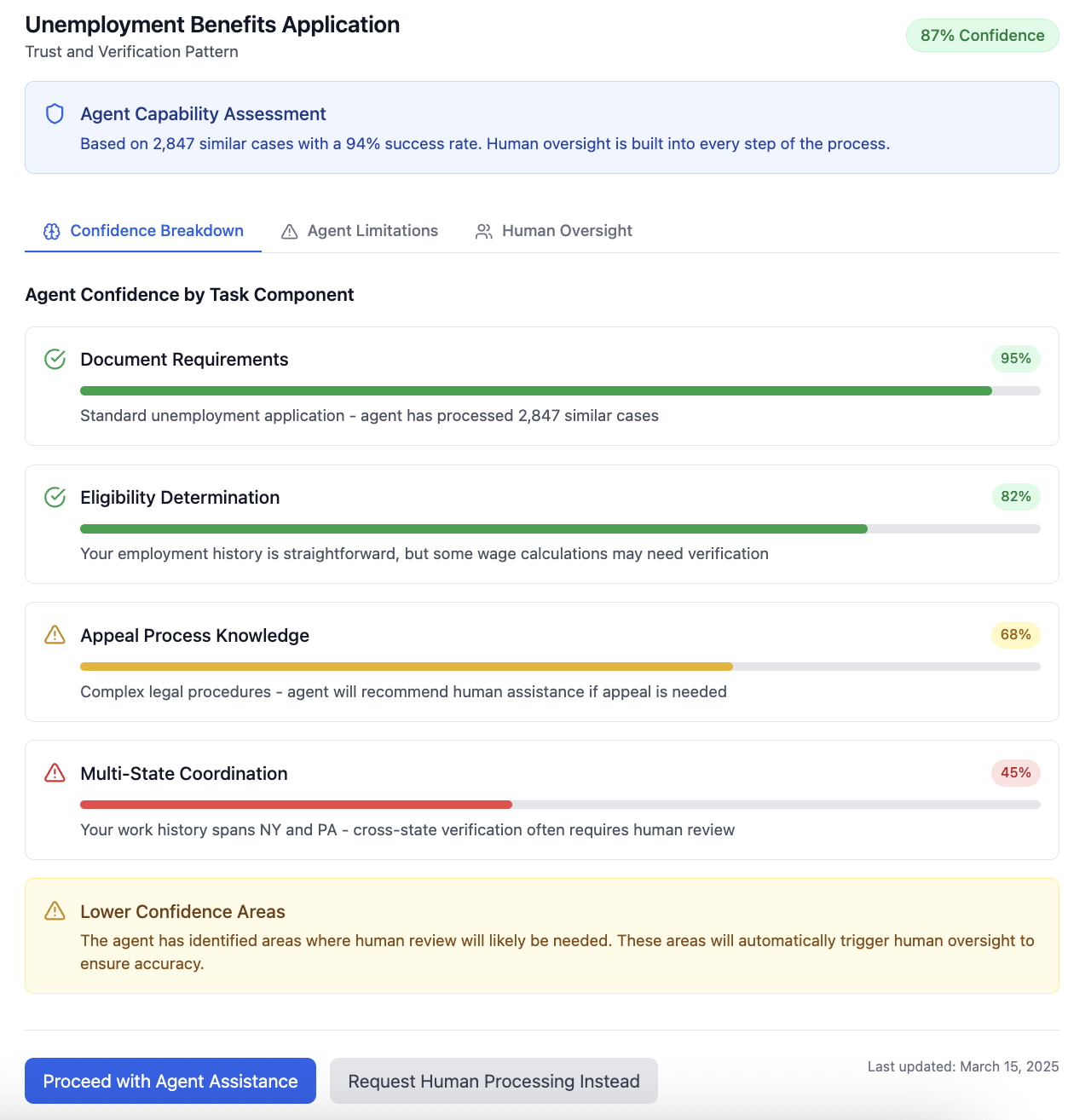

Trust and Verification Patterns: These patterns will establish the foundation of confidence necessary for individuals to delegate important government tasks to autonomous agents.

Confidence and capability indicators, required disclosure of agent limitations, and human-in-the-loop integration patterns will create clear expectations about agent capabilities and human oversight systems, addressing the unique accountability requirements for government services.

The example shown here provides transparency about agent capabilities, limitations, and human oversight before people delegate important tasks. Key elements include granular confidence indicators, explicit limitation disclosure, multi-level human oversight structure, and available escalation options. The public should be able to make informed decisions about delegation while understanding exactly what safeguards are in place.
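To make the link between confidence indicators, disclosed limitations, and human oversight concrete, here is a minimal Python sketch of a routing rule. The `AgentCapabilityProfile` type, the task names, the 0.8 review threshold, and the three routing outcomes are all assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass
class AgentCapabilityProfile:
    """Hypothetical disclosure of what an agent can and cannot do."""
    confidence_by_task: Dict[str, float]   # assumed scores between 0.0 and 1.0
    disclosed_limitations: FrozenSet[str]  # tasks the agent must not attempt
    review_threshold: float = 0.8          # assumed cutoff for human review


def route_task(profile: AgentCapabilityProfile, task: str) -> str:
    """Decide who handles a task: the agent, the agent with review, or a human."""
    if task in profile.disclosed_limitations:
        return "human_only"
    # Unknown tasks default to zero confidence, so they always get human review.
    confidence = profile.confidence_by_task.get(task, 0.0)
    if confidence < profile.review_threshold:
        return "agent_with_human_review"
    return "agent"
```

Defaulting unknown tasks to human review is a deliberately conservative choice in this sketch; it reflects the accountability concerns described above rather than any established standard.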

Building a foundation amid uncertainty

The current hype around AI agents may not fully materialize, or it may move in a completely new direction, but government agencies can’t afford to ignore the delegation paradigm entirely. The administrative burden reduction potential is significant, and the design challenges are likely too different from current thinking to address in a reactive way.

By developing these design patterns now, government agencies can take concrete preparatory steps without committing to a specific technology or vendor. Agencies can begin by conducting (or continuing) user research to understand how people currently experience administrative burden in their processes, identifying which tasks they would be most willing to delegate, and testing the design patterns outlined here through pilot programs that simulate agent-like interactions using existing automation or human staff. This research-and-design approach will allow agencies to build institutional knowledge about delegation-based service design, validate people’s comfort levels with different types of autonomous action, and develop the policy frameworks and oversight structures that any delegation system will require — all while remaining technology-agnostic.

By developing and testing these design patterns now, government agencies prepare for multiple scenarios. If agentic technology matures as promised, agencies will have thoughtful frameworks for implementation. If the technology evolves differently, the patterns still address fundamental challenges of public-government delegation relationships. And if the current hype deflates entirely, agencies will have learned valuable lessons about designing for people’s autonomy and trust — insights that apply regardless of the underlying technology.

The opportunity to reduce administrative burden and democratize access to government services is real. Whether it comes through autonomous AI agents or some other technological evolution, government agencies that prepare now for delegation-based public interactions will be positioned to capture those benefits while maintaining the trust and accountability that democratic governance requires.
