Using generative AI to transform policy into code

The cornerstone of this year’s conference was a series of live demonstrations highlighting the use of artificial intelligence (AI) tools and platforms to improve the delivery of public benefits. The Policy2Code Prototyping Challenge was a months-long effort by 14 teams from across the country (and one international team) to identify new ways to use generative AI to improve public benefit delivery. Earlier this year, Ad Hoc was honored to be one of the teams selected to participate in this important program.

While many of the teams in the challenge focused on enhancing the experience of applicants and beneficiaries or assisting benefit “navigators,” Ad Hoc’s team took a different approach. In our work on public benefit systems, one common source of friction we have observed is a lack of shared understanding and context between the people who are experts in (often complex) public benefit program policies and those who are experts in implementing software systems. This gap can make implementing a new system difficult, costly, and sometimes error-prone.

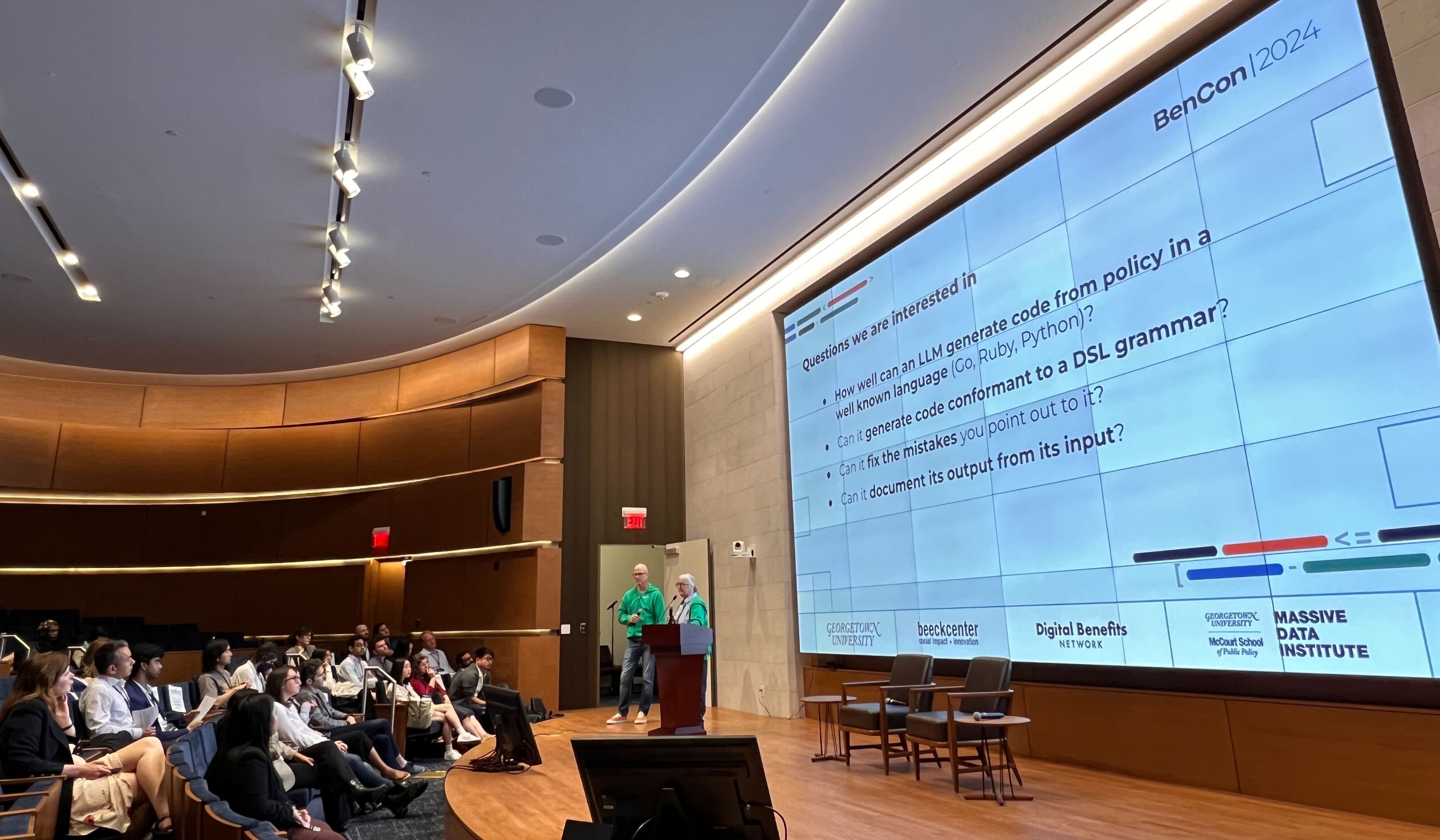

Our focus for the Policy2Code Prototyping Challenge was to empower policy subject matter experts and make them the ultimate arbiters of correctness in new system implementations. We did this by using large language models (LLMs) to generate an intermediate format that sits between policy language and software code. This intermediate format is a domain-specific language (DSL) that captures the specific details of public benefit rules in a form that both policy experts and software engineers can understand. It gives the two groups a shared understanding of what is required when public benefit program rules are implemented in a software system, and it lets policy experts more easily verify that an implementation is correct.
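To make the idea of an intermediate format more concrete, here is a minimal sketch in Python. It is not our experimental DSL: the class names (`Compare`, `AllOf`, `AnyOf`), the applicant fields, and the thresholds are all hypothetical and chosen only for illustration. The sketch shows one rule definition serving two audiences: `describe` renders it as plain language a policy expert could review, and `evaluate` runs the same rule against applicant data the way a software system would.

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical mini-DSL for illustration only. The names, fields, and
# thresholds below are invented and do not come from any real benefit
# program or from the DSL described in this post.


@dataclass(frozen=True)
class Compare:
    field: str                      # applicant attribute, e.g. "monthly_income"
    op: str                         # one of "<=", ">=", "=="
    value: Union[int, float, bool]  # value the attribute is compared against


@dataclass(frozen=True)
class AllOf:
    conditions: tuple               # every sub-rule must hold


@dataclass(frozen=True)
class AnyOf:
    conditions: tuple               # at least one sub-rule must hold


# Each operator maps to (comparison function, plain-language phrase).
_OPS = {
    "<=": (lambda a, b: a <= b, "is at most"),
    ">=": (lambda a, b: a >= b, "is at least"),
    "==": (lambda a, b: a == b, "is"),
}


def evaluate(rule, applicant: dict) -> bool:
    """Apply a rule to applicant data, as a software system would."""
    if isinstance(rule, Compare):
        compare, _ = _OPS[rule.op]
        return compare(applicant[rule.field], rule.value)
    if isinstance(rule, AllOf):
        return all(evaluate(c, applicant) for c in rule.conditions)
    if isinstance(rule, AnyOf):
        return any(evaluate(c, applicant) for c in rule.conditions)
    raise TypeError(f"unsupported rule: {rule!r}")


def describe(rule) -> str:
    """Render a rule as plain language for a policy expert to review."""
    if isinstance(rule, Compare):
        _, phrase = _OPS[rule.op]
        return f"{rule.field.replace('_', ' ')} {phrase} {rule.value}"
    if isinstance(rule, AllOf):
        return "(" + " and ".join(describe(c) for c in rule.conditions) + ")"
    if isinstance(rule, AnyOf):
        return "(" + " or ".join(describe(c) for c in rule.conditions) + ")"
    raise TypeError(f"unsupported rule: {rule!r}")


# An invented eligibility rule, written once and used both ways.
ELIGIBILITY = AllOf((
    Compare("household_size", ">=", 1),
    AnyOf((
        Compare("monthly_income", "<=", 2000),
        Compare("receives_other_aid", "==", True),
    )),
))

if __name__ == "__main__":
    applicant = {
        "household_size": 2,
        "monthly_income": 1500,
        "receives_other_aid": False,
    }
    print(describe(ELIGIBILITY))
    print(evaluate(ELIGIBILITY, applicant))
```

Because the readable description and the executable check both come from the same rule object, a policy expert who signs off on the description is also signing off on the logic the system runs. That is the property an intermediate format is meant to provide, however the real DSL is designed.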

We spent the summer working with various LLMs to develop the tools and approaches needed to generate programs describing public benefit policies in our experimental DSL. At last week’s BenCon conference, our team was recognized for its work with an award for Outstanding Policy Impact. We believe that the approach we developed for the Policy2Code Prototyping Challenge holds enormous potential, and we’re excited to continue iterating on it.

Our team’s work was built on our experience working with public benefit agencies as well as our deep expertise in technology, human-centered design, and artificial intelligence tools. If you are interested in our work in these areas, don’t hesitate to reach out for more information.
