What does product thinking look like at Search.gov?

Meet one of the most unusual services in government: Search.gov powers the search experience for your favorite government websites, from the National Park Service to NASA, similar to how Google works for the broader internet. The search experience is often a person’s first interaction with a digital service, which is why it’s so important to get the experience right for users.

Part of getting that experience right involves using product management to drive the right outcomes for the problem we’re trying to solve. Ad Hoc is part of the Search.gov team led by Fearless in support of the General Services Administration. I’m the Product Manager for Search.gov, and it’s my job to help the entire team balance competing interests and manage difficult tradeoffs in order to best meet user needs, business goals, and policy constraints.

Search.gov is an enormous opportunity to improve interactions between government and the people it serves. However, like any tech product, there is more to build than we have resources for, so setting outcomes and understanding what to build (or not build) accordingly is key to a successful product. In this piece, we’ll walk through some of the tools and techniques we’ve used with our customers at GSA to help them see Search.gov as a product, rather than a technology project.

Evolving product thinking

Historically, the Search.gov team’s scope of work and needs were smaller, and the team was able to implement an agile approach internally, managed largely by staff within the GSA office. However, conditions changed: as oversight increased with the growing product, GSA staff had to attend to far more operational and administrative tasks than ever before. The product’s needs grew faster than the staff’s ability to introduce additional product thinking.

Maturing the product management processes within Search.gov — a concerted effort by the whole team — helped us navigate the constant uncertainty and infrastructure upkeep that define the product. The first steps have been setting a north star, segmenting our users, and establishing a decision-making framework before tracking success.

Finding a common mission and direction

One key approach was to define a north star that tells us where Search.gov is headed, and what success looks like. Agreeing on this metric provides a common sense of direction and purpose. When we want to upgrade, build, or fix something, we ask ourselves: how does this align with our north star?

Search.gov’s north star is similar to many private-sector products: increase market share. As a taxpayer-funded product, efficient resource use is a key underlying principle for Search.gov, and what better way to track efficiency than to increase the number of websites using Search.gov (a free tool) in favor of paid search services? We can help agencies avoid tens of thousands of dollars in costs by transitioning them to Search.gov’s indexing technology.

Our north star metric has since been market share, the percentage of Search.gov traffic that uses Search.gov’s indexing technology.
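As a rough illustration of that definition, here is a minimal sketch of the calculation. It assumes traffic is counted in search queries, which the definition above does not specify; the function name and the sample numbers are hypothetical.

```python
def market_share(indexed_queries: int, total_queries: int) -> float:
    """Percentage of traffic served by Search.gov's own indexing technology.

    Assumption: "traffic" is measured as a count of search queries.
    """
    if total_queries <= 0:
        raise ValueError("total_queries must be positive")
    if not 0 <= indexed_queries <= total_queries:
        raise ValueError("indexed_queries must be between 0 and total_queries")
    return 100.0 * indexed_queries / total_queries


# Illustrative numbers only, not real Search.gov data.
share = market_share(indexed_queries=450_000, total_queries=1_000_000)
print(f"{share:.1f}%")
```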

Grouping customers by their needs

Similar to other software-as-a-service organizations, Search.gov has two user types: site administrators (who customize their agencies’ search experience) and searchers (who actually use the search boxes). User focus and segmentation are crucial because every feature should benefit as many users as possible.

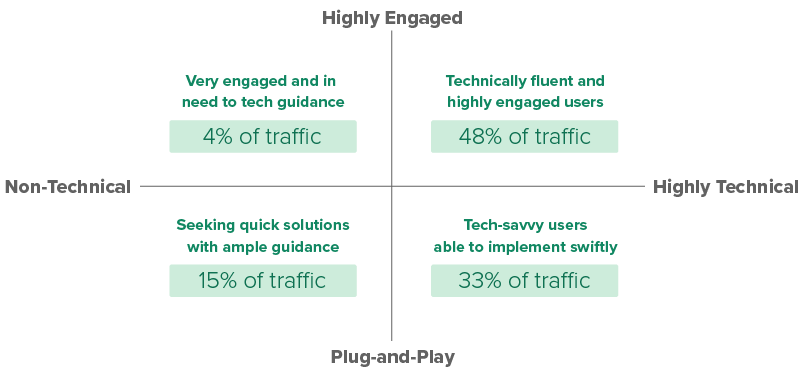

The team grouped our users into four broad categories along two attributes: technical fluency and engagement. Segmenting along these characteristics shows that each quadrant accounts for a different level of traffic.

Each customer type asks something different of Search.gov. Historically, stakeholder requests have originated from the top right quadrant (highly engaged, highly technical agencies), but the other user types account for 52% of traffic. Building this chart has allowed us to recognize and seek out new voices in the user feedback process. Before, we simply tallied customer requests for features. We now weight customer requests by the amount of traffic they carry so that we can frame our thinking around improving as many customers’ experiences as possible.
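To make the difference between tallying and traffic-weighting concrete, here is a small sketch. The feature names, site names, and traffic figures are invented for illustration, and using each site's query volume as the weight is an assumption rather than a description of the team's actual spreadsheet.

```python
from collections import Counter

# Hypothetical feature requests as (feature, requesting site) pairs.
requests = [
    ("advanced filters", "site_a"),
    ("advanced filters", "site_b"),
    ("better indexing", "site_c"),
]

# Hypothetical monthly query volume per site (illustrative numbers only).
traffic_by_site = {"site_a": 5_000, "site_b": 5_000, "site_c": 40_000}


def tally_requests(requests):
    """Old approach: one vote per request, regardless of who asked."""
    return Counter(feature for feature, _site in requests)


def weight_requests(requests, traffic_by_site):
    """New approach: each request counts in proportion to the site's traffic."""
    weighted = Counter()
    for feature, site in requests:
        weighted[feature] += traffic_by_site.get(site, 0)
    return weighted


print(tally_requests(requests).most_common())
print(weight_requests(requests, traffic_by_site).most_common())
```

In this made-up data, a plain tally favors the feature requested twice, while the traffic-weighted view favors the feature requested once by the site that carries most of the traffic.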

Recently, we faced some trade-offs between improving our content indexing and strengthening specific search functionalities. Segmenting our users enabled us to steer toward our north star and prioritize work that would benefit more of our market, instead of catering to the most vocal customer.

Building a decision-making framework

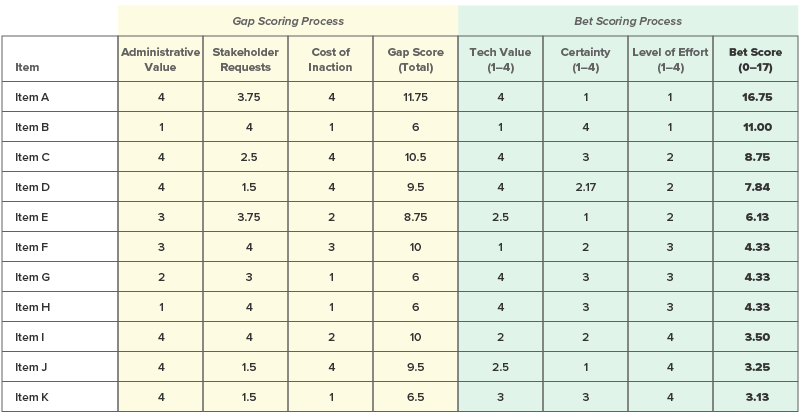

Another key to Search.gov’s product thinking is our decision-making approach. Similar to the RICE (Reach, Impact, Confidence, and Effort) model, Search.gov prioritizes its problem spaces through a gap-bet scoring process.

Gaps (the problems) are matched with bets (potential solutions) and then ranked in order of importance through a series of steps. In some cases, the bet is obvious. If the gap is “a version needs upgrading,” then the bet is “upgrade the version.” But in other cases, such as rebuilding our entire search results page, the path is less clear. Bets are ranked on (1) the tech value, (2) the level of effort, and (3) the certainty of their estimates.

Here’s our framework for the gap-bet scoring process:

1. **Find the gap.** The business side of the team takes a crack at defining the problem. Each gap is a problem that we want to solve: what is the unmet need that Search.gov has identified?

2. **Assess the gap.** We score each gap on a scale of 0-12, summing business value, the cost of inaction, and the weighted stakeholder requests (which allows us to slightly favor websites with more traffic).

3. **Rank the gaps.** After ordering our gaps by their scores, we pick the top 10-15% of gaps to discuss with our development team.

4. **Brainstorm bets.** With the development team, we brainstorm and assess how to close the gaps. Bets are ranked on (1) tech value, (2) level of effort, and (3) certainty of the estimate.

5. **Score options.** Finally, we calculate a score using the following formula: (gap score + tech value + certainty) / (level of effort).
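The scoring steps can be sketched in a few lines of code. This is an illustration under stated assumptions, not the team's actual tooling: the 0-12 gap score is assumed to be three components of 0-4 each, the scales for tech value, certainty, and level of effort are hypothetical, and all names and numbers are invented.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Gap:
    name: str
    business_value: int      # assumed 0-4
    cost_of_inaction: int    # assumed 0-4
    weighted_requests: int   # assumed 0-4, already weighted by traffic

    @property
    def score(self) -> int:
        # Step 2: the gap score is the sum of its three components (0-12).
        return self.business_value + self.cost_of_inaction + self.weighted_requests


@dataclass(frozen=True)
class Bet:
    name: str
    gap: Gap
    tech_value: int       # hypothetical scale
    certainty: int        # hypothetical scale
    level_of_effort: int  # hypothetical scale; must be positive

    @property
    def score(self) -> float:
        # Step 5: (gap score + tech value + certainty) / (level of effort).
        if self.level_of_effort <= 0:
            raise ValueError("level_of_effort must be positive")
        return (self.gap.score + self.tech_value + self.certainty) / self.level_of_effort


def top_gaps(gaps, fraction=0.15):
    """Step 3: keep the highest-scoring fraction of gaps (at least one)."""
    ranked = sorted(gaps, key=lambda g: g.score, reverse=True)
    keep = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:keep]


# Illustrative inputs only.
upgrade_gap = Gap("a version needs upgrading", 3, 4, 2)        # gap score 9
results_gap = Gap("search results page is dated", 4, 2, 4)     # gap score 10

bets = [
    Bet("upgrade the version", upgrade_gap, tech_value=3, certainty=4, level_of_effort=2),
    Bet("rebuild the results page", results_gap, tech_value=4, certainty=1, level_of_effort=5),
]

for bet in sorted(bets, key=lambda b: b.score, reverse=True):
    print(f"{bet.name}: {bet.score:.1f}")
```

With these made-up inputs, the small, well-understood upgrade scores 8.0 and the large, uncertain rebuild scores 3.0, even though the rebuild addresses the higher-scoring gap. Dividing by level of effort is what lets a cheap, confident bet outrank an expensive, uncertain one.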

It’s human nature to prioritize items we hear about more often or issues we see more frequently in our peripheral vision. For example, because our developers came across old pieces of our code base so often, the team’s instinct was that legacy code was the number one issue to address, and I was certain that this framework would surface that. But when we scored the gap, it ranked much lower than expected, which forced us to reflect on what our most urgent work actually was.

Tracking success through metrics

Competing demands require that Search.gov default to “move forward as quickly as possible”: the dedicated team is constantly balancing high-profile stakeholder asks with the day-to-day demands of the product. Having more space to think about the product as a whole, though, has meant taking a step back to evaluate longer-term strategies through data, largely in the form of Objectives and Key Results (OKRs). Using data to measure and evaluate our progress has two primary impacts: (1) it helps us appreciate how far we’ve come, and (2) it shows us which bets from the gap-bet process brought real results. For example, to address all of our user segments, we’ve made infrastructure improvements a priority, and our performance metrics have improved drastically, with a 60% decrease in outages over the last two quarters.

Product thinking is a continuing process at Search.gov. Tracking metrics and prioritizing thoughtfully have allowed us to navigate abstract and uncertain conditions better than we could have expected. The numbers suggest that we’re heading in the right direction, and we hope, with time and more targeted efforts, to implement more product-driven approaches alongside our prime contractor Fearless and our GSA customers.
